Adversarial Examples That Fool Detectors

Computer vision research from Jiajun Lu, Hussein Sibai and Evan Fabry examines how to block neural network object detection using what looks like DeepDream-esque camouflage:

An adversarial example is an example that has been adjusted to produce a wrong label when presented to a system at test time. To date, adversarial example constructions have been demonstrated for classifiers, but not for detectors. If adversarial examples that could fool a detector exist, they could be used to (for example) maliciously create security hazards on roads populated with smart vehicles. In this paper, we demonstrate a construction that successfully fools two standard detectors, Faster RCNN and YOLO. The existence of such examples is surprising, as attacking a classifier is very different from attacking a detector, and that the structure of detectors - which must search for their own bounding box, and which cannot estimate that box very accurately - makes it quite likely that adversarial patterns are strongly disrupted. We show that our construction produces adversarial examples that generalize well across sequences digitally, even though large perturbations are needed. We also show that our construction yields physical objects that are adversarial.
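The abstract doesn't spell out the construction, so what follows is not the authors' method. It is a toy sketch, under loudly stated assumptions, of why a detector is a different target from a classifier: a detector scores many candidate windows, so an attack has to push all of them down at once. Here a hypothetical 1-D "detector" (a linear filter plus sigmoid, slid across a signal) is attacked with signed-gradient steps on the sum of all window scores, with the perturbation kept within ±eps. The function names, weights and signal are illustrative inventions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def window_scores(signal, weights, bias):
    """Slide a linear filter over the signal and score every window position,
    loosely mimicking a detector that searches over candidate boxes."""
    k = len(weights)
    scores = []
    for start in range(len(signal) - k + 1):
        z = bias + sum(w * signal[start + i] for i, w in enumerate(weights))
        scores.append(sigmoid(z))
    return scores


def hide_from_detector(signal, weights, bias, eps=0.5, step=0.05, iters=50):
    """Signed-gradient descent on the SUM of all window scores, so every
    candidate window is suppressed together. Each perturbation value stays
    in [-eps, eps]; this toy does no pixel-range clamping."""
    delta = [0.0] * len(signal)
    for _ in range(iters):
        adv = [x + d for x, d in zip(signal, delta)]
        scores = window_scores(adv, weights, bias)
        grad = [0.0] * len(signal)
        # d sigmoid(z)/dz = s * (1 - s); each window adds to the samples it covers
        for start, s in enumerate(scores):
            for i, w in enumerate(weights):
                grad[start + i] += s * (1.0 - s) * w
        for j, g in enumerate(grad):
            sign = (g > 0) - (g < 0)
            delta[j] = max(-eps, min(eps, delta[j] - step * sign))
    return [x + d for x, d in zip(signal, delta)]


signal = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0]  # a bright "object" in the middle
weights, bias = [1.0, 1.0, 1.0], -2.0               # hypothetical 3-tap detector

before = max(window_scores(signal, weights, bias))
adv_signal = hide_from_detector(signal, weights, bias)
after = max(window_scores(adv_signal, weights, bias))
print(f"strongest detection before: {before:.3f}, after: {after:.3f}")
```

Attacking only the single best-scoring window would let a neighbouring window take over as the detection; summing over every window is one simple way to account for the detector's own box search.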

The paper can be found here

YouTube Artifacts

The latest AR exhibition from MoMAR (who ran a guerilla show earlier this year) returns to the Pollock Room at MoMA New York, featuring works by David Kraftsow, creator of the YouTube Artifacts bot, which regularly generates animated images from distorted videos:

Welcome to The Age of the Algorithm. A world in which automated processes are no longer simply tools at our disposal, but the single greatest omnipresent force currently shaping our world. For the most part, they remain unseen. Going about their business, mimicking human behavior and making decisions based on statistical analysis of what they ‘think’ is right. If the role of art in society is to incite reflection and ask questions about the state of our world, can algorithms be a part of determining and defining people’s artistic and cultural values? MoMAR presents a series of eight pieces created by David Kraftsow’s YouTube Artifact Bot.

More Here

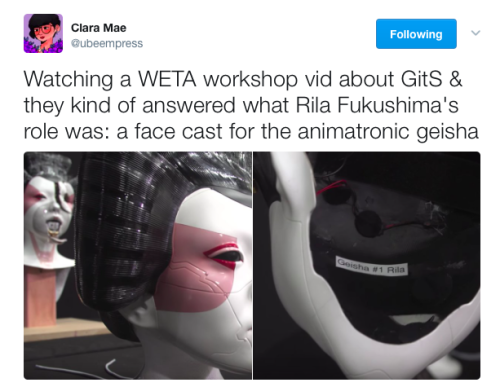

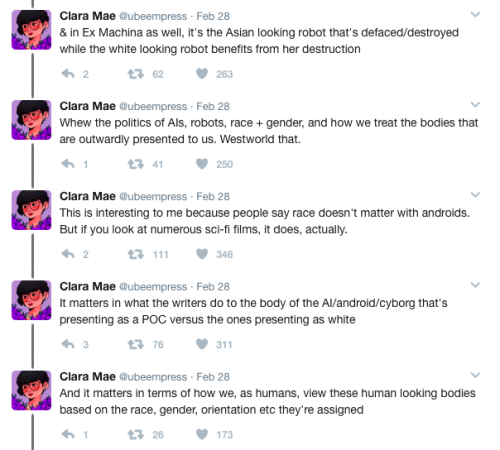

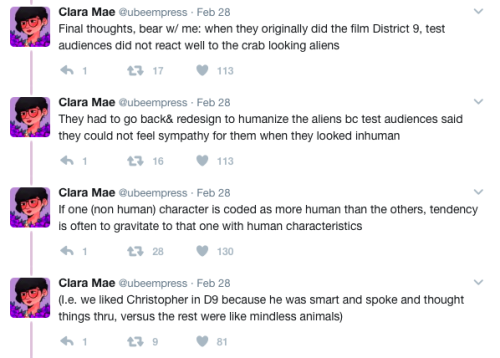

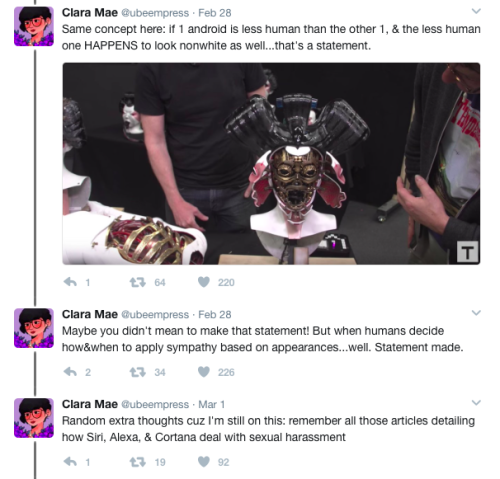

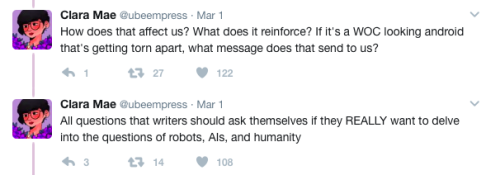

Clara Mae’s brilliant thoughts on race, gender, and AI in film

Creating Face-Based AR Experiences

Apple have just published a sample for developers showing how to use the iPhone X's front-facing camera in AR apps:

This sample app presents a simple interface allowing you to choose between four augmented reality (AR) visualizations on devices with a TrueDepth front-facing camera (see iOS Device Compatibility Reference).

The camera view alone, without any AR content.

The face mesh provided by ARKit, with automatic estimation of the real-world directional lighting environment.

Virtual 3D content that appears to attach to (and be obscured by parts of) the user’s real face.

A simple robot character whose facial expression is animated to match that of the user.

Link

An intro video can be found here

Polish priest blessing a newly opened “Bitcoin embassy.” Warsaw, 2014.

Adversarial Machines

There have been some interesting developments recently in adversarial training, but I thought it would be a good idea to start with what adversarial images actually are. This Medium article by @samim is an accessible explanation of what's going on. It references this talk by Ian Goodfellow, which asks whether statistical models understand the world.

Machine learning can do amazing, magical things, but the computer isn't looking at things the same way that we do. One way to exploit that is to add patterns that we can't detect but that change the data enough to completely fool the computer. Is it a dog or an ostrich?
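One standard way to build such a pattern is the fast gradient sign method (FGSM) from Goodfellow and colleagues: nudge every input feature by a small amount eps in whichever direction increases the model's loss. The sketch below applies it to a toy logistic-regression "dog vs. not dog" classifier in plain Python; the model, its weights and the 1,000-feature input are hypothetical stand-ins for a real image classifier.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 ("dog") under a logistic-regression model."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(weights, bias, x, label, eps):
    """Fast gradient sign method: x_adv = x + eps * sign(dLoss/dx)."""
    p = predict(weights, bias, x)
    # For cross-entropy loss on a logistic model, dLoss/dx_i = (p - label) * w_i
    grad = [(p - label) * w for w in weights]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


n = 1000
weights, bias = [0.01] * n, -4.0  # hypothetical "trained" weights
image = [0.5] * n                 # a "dog": z = -4 + 0.01 * 500 = 1
adv = fgsm(weights, bias, image, label=1, eps=0.15)

print(f"P(dog) original:    {predict(weights, bias, image):.3f}")
print(f"P(dog) adversarial: {predict(weights, bias, adv):.3f}")
print(f"largest per-feature change: {max(abs(a - b) for a, b in zip(adv, image)):.2f}")
```

No single feature moves by more than 0.15, but because every nudge points the same way, their effects on the score add up across 1,000 features and flip the prediction.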

There has been quite a lot of research into finding ways round this problem, as well as into exploiting it to evade facial recognition or other surveillance. And, like I said, there have been some interesting recent developments that I hope to talk about here.

https://medium.com/@samim/adversarial-machines-998d8362e996#.n7j43766v

Полигонаэдр / Polygonahedron: an experiment with rear-projection film, Leap Motion and Processing https://vimeo.com/191812588

9 Ocean Facts You Likely Don’t Know, but Should

Earth is a place dominated by water, mainly oceans. It's also a place our researchers study to understand life. Trillions of gallons of water flow freely across the surface of our blue-green planet. The ocean's vibrant ecosystems impact our lives in many ways.

In celebration of World Oceans Day, here are a few things you might not know about these complex waterways.

1. Why is the ocean blue?

The way light is absorbed and scattered throughout the ocean determines which colors it takes on. Red, orange, yellow, and green light are absorbed quickly beneath the surface, leaving blue light to be scattered and reflected back. This is why we see various blue and violet hues.
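The absorption side of this can be sketched with the Beer-Lambert law, I(z) = I0 · exp(-a · z), where a is the absorption coefficient for a given wavelength. The coefficients below are rounded, approximate values for pure water, assumed for illustration; real seawater also scatters light and carries particles and dissolved matter, which this sketch ignores.

```python
import math

# Approximate absorption coefficients of pure water, per metre (rounded,
# illustrative assumptions; scattering and particles are ignored)
ABSORPTION_PER_M = {
    "red (~700 nm)": 0.65,
    "orange (~600 nm)": 0.22,
    "yellow (~580 nm)": 0.09,
    "green (~550 nm)": 0.057,
    "blue (~450 nm)": 0.009,
}


def fraction_remaining(absorption_per_m, depth_m):
    """Beer-Lambert law: share of light left after depth_m metres, I/I0 = exp(-a*z)."""
    return math.exp(-absorption_per_m * depth_m)


for color, a in ABSORPTION_PER_M.items():
    print(f"{color:>18}: {fraction_remaining(a, 10):6.1%} left at 10 m")
```

Because the decay is exponential, even a modest difference in the coefficient becomes a huge difference a few metres down, which is why red vanishes first and blue survives.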

2. Want a good fishing spot?

Follow the phytoplankton! These small plant-like organisms are the beginning of the food web for most of the ocean. As phytoplankton grow and multiply, they are eaten by zooplankton, small fish and other animals. Larger animals then eat the smaller ones. The fishing industry identifies good spots by using ocean color images to locate areas rich in phytoplankton. Phytoplankton, as revealed by ocean color, frequently show scientists where ocean currents provide nutrients for plant growth.

3. The ocean is many colors.

When we look at the ocean from space, we see many different shades of blue. Using instruments that are more sensitive than the human eye, we can carefully measure the fantastic array of colors of the ocean. Different colors may reveal the presence and amount of phytoplankton, sediments and dissolved organic matter.

4. The ocean can be a dark place.

About 70 percent of the planet's surface is ocean, with an average depth of more than 12,400 feet. Given that light doesn't penetrate much deeper than 330 feet below the water's surface (in the clearest water), most of our planet is in a perpetual state of darkness. Although dark, this part of the ocean still supports many forms of life, some of which are fed by sinking phytoplankton.
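The arithmetic behind "perpetual state of darkness", using only the two figures above. Since the average depth is "more than" 12,400 feet, the sunlit share computed here is an upper bound.

```python
AVERAGE_DEPTH_FT = 12_400  # average ocean depth quoted above ("more than")
LIT_DEPTH_FT = 330         # roughly the deepest light reaches, in the clearest water

lit_share = LIT_DEPTH_FT / AVERAGE_DEPTH_FT
print(f"sunlit: {lit_share:.1%} of an average water column; dark: {1 - lit_share:.1%}")
```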

5. We study all aspects of ocean life.

Instruments on satellites in space, hundreds of kilometers above us, can measure many things about the sea: surface winds, sea surface temperature, water color, wave height, and height of the ocean surface.

6. In a gallon of average sea water, there is about ½ cup of salt.

The amount of salt varies depending on location. The Atlantic Ocean is saltier than the Pacific Ocean, for instance. Most of the salt in the ocean is the same kind of salt we put on our food: sodium chloride.
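A rough check of the ½ cup figure. The salinity, seawater density and bulk density of table salt are typical values assumed for illustration, and the sketch treats all dissolved salts as if they were table salt; the gallon and cup conversions are the US customary definitions.

```python
GALLON_L = 3.785411784             # US liquid gallon, in litres
CUP_ML = 236.5882365               # US customary cup, in millilitres
SEAWATER_DENSITY_KG_PER_L = 1.025  # typical seawater density (assumption)
SALINITY_G_PER_KG = 35.0           # typical ocean salinity, grams per kg (assumption)
SALT_BULK_DENSITY_G_PER_ML = 1.2   # loose table salt, rough assumption

salt_g = GALLON_L * SEAWATER_DENSITY_KG_PER_L * SALINITY_G_PER_KG
salt_cups = salt_g / SALT_BULK_DENSITY_G_PER_ML / CUP_ML
print(f"{salt_g:.0f} g of salt per gallon, roughly {salt_cups:.2f} cups")
```

Saltier water, like parts of the Atlantic, pushes the result up a little; fresher water pushes it down.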

7. A single drop of sea water is teeming with life.

It will most likely have millions (yes, millions!) of bacteria and viruses, thousands of phytoplankton cells, and even some fish eggs, baby crabs, and small worms.

8. Where does Earth store freshwater?

Just 3.5 percent of Earth's water is fresh, that is, with few salts in it. You can find Earth's freshwater in our lakes, rivers, and streams, but don't forget groundwater and glaciers. Over 68 percent of Earth's freshwater is locked up in ice and glaciers, and another 30 percent is in groundwater.
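Those percentages also show how little freshwater is left for lakes, rivers and streams. A quick sketch of the arithmetic, taking "over 68 percent" as exactly 68 percent, so the remainder is an upper bound:

```python
FRESH_SHARE = 0.035           # share of all of Earth's water that is fresh
ICE_SHARE_OF_FRESH = 0.68     # "over 68 percent" of freshwater, taken as 68%
GROUND_SHARE_OF_FRESH = 0.30  # groundwater share of freshwater

other_share_of_fresh = 1 - ICE_SHARE_OF_FRESH - GROUND_SHARE_OF_FRESH
print(f"lakes, rivers, streams and everything else: at most {other_share_of_fresh:.0%} of freshwater")
print(f"that is about {FRESH_SHARE * other_share_of_fresh:.2%} of all of Earth's water")
print(f"ice and glaciers alone: {FRESH_SHARE * ICE_SHARE_OF_FRESH:.1%} of all water")
```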

9. Phytoplankton are the “lungs of the ocean”.

Just as forests are considered the "lungs of the earth", phytoplankton provide the same service in the ocean! They consume carbon dioxide dissolved in the sunlit portion of the ocean and produce about half of the world's oxygen.

Want to learn more about how we study the ocean? Follow @NASAEarth on Twitter.

Make sure to follow us on Tumblr for your regular dose of space: http://nasa.tumblr.com.

Why virtual reality is perfect for creativity

The second part of Nat & Friends' look at virtual reality tech, this time focused on how it can be a creative platform, including Tilt Brush, 360 video and Blocks:

In this video (part 2 of a two-part VR series) I explore VR creativity tools and how artists and creators are using them. I do a Tilt Brush chicken dance, play with brains, and help YouTuber Vanessa Hill make a video about how your mind reacts to VR

More Here

Part One can be found here

Kavya Kopparapu, 16, invented an app and lens to diagnose the leading cause of preventable blindness

A teenager managed to develop an app that can help diagnose a diabetes-related condition affecting her grandfather.

Kavya Kopparapu’s grandfather lives in India, where there aren’t enough ophthalmologists to help diagnose all of those who could be affected by diabetic retinopathy.

DR is the world’s leading cause of vision loss in people age 20 to 65, according to the International Agency for the Prevention of Blindness, which estimated that 50% of people with diabetes are undiagnosed.

But in the absence of proper doctors, “computers could be used in their place,” Kopparapu, 16, said in a TEDx talk on artificial intelligence.

Alongside her brother and another classmate, she invented Eyeagnosis, a smartphone app that can photograph patients' eyes and match them to a database of 34,000 retinal scans collected from the National Institutes of Health. Read more (8/8/17)

follow @the-future-now

5 Demos Where Code Meets Music

The latest Nat & Friends episode showcases a selection of web-based experiments exploring sound and music (plus a couple of Google Assistant easter eggs):

Music is a fun way to explore technologies like coding, VR, and machine learning. Here are a few musical demos and experiments that you can play with – created by musicians, coders, and some friends at Google.

More Here